We come across ‘dirty’ data all the time. It’s one of the realities of working with data on a regular basis. With the rise of data democratization in the workplace, more people across the organization are exposed to the problem of dirty data and are also more likely to be involved in the cleansing process.

In this article we’re going to look at how non-technical people can perform data cleansing in SQL Server using 2 different methods: SQL Spreads and Data Quality Services.

What is Data Cleansing?

Data cleansing is the process of detecting and correcting (or removing) corrupt or inaccurate records from a record set, table, or database. Data cleansing may be performed interactively with data wrangling tools, or as batch processing through scripting in languages like T-SQL or R.

How does data become corrupt or inaccurate (ie “dirty”) in the first place? Some of the more common reasons are listed below:

- Human error during manual input

- Corruption during data transfer

- Different data definitions being used in sources of merged data sets

- Different formats (eg dates) being used in sources of merged data data sets

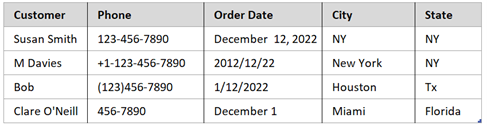

The type of data that we’re talking about here is often master data or reference data. Here is a very simple example of some data that needs to be cleansed:

The data cleansing process for this example may include the following:

- Normalization of the Phone number field to use a standard format of {area code-XXX-XXXX}

- Formatting all dates to be {YYYY-MM-DD}

- Looking up the ‘approved’ values for City and State (eg finding that “NY” is a synonym for New York)

- Rejecting the 4th record because the Order Date value is incomplete (note that we may choose to accept the 3rd record even though the Customer name is not complete as it may not be necessary to have Last Name)

So, we can summarize the process in the following way:

- Correct typographical and formatting inconsistencies/errors

- Validate and correct values against a list of know entities (this can be strict or fuzzy)

These steps require that there are some business rules or knowledge base that we are going to be checking against. On a small scale, this could involve someone looking through data records and manually making changes based on their domain knowledge. At larger scales, a combination of computer-assisted and interactive methods is usually employed.

Why is data cleansing important?

Incorrect, inconsistent data can result in organizations making poor decisions which could negatively impact performance. Many organizations now recognize that having high quality data is a pre-requisite for doing business.

High-quality data is related to a set of criteria which are usually defined as:

- Validity: how well the data conforms to defined business rules or constraints. This is often a problem when data is entered into spreadsheets that have inadequate data entry validation. Some examples of constraints include: data-type (e.g. a value must be a decimal), range constraints (e.g. a percentage must be between 0 and 100), uniqueness (e.g. an ID field must be unique across a data set) and foreign-key or set-membership constraints.

- Accuracy: The degree of conformity of a measure to a standard or a true value. This is normally hard to achieve via data-cleansing because the ‘true values’ are not always available. One example is where zip code data is checked in an external database to see if the entered street address is valid for the zip code.

- Completeness: In situations where data is incomplete, it is normally appropriate as part of the data cleansing process to designate values as “missing” or “unknown”.

- Consistency: The degree to which a set of measures are equivalent across systems. In situations where data contradicts each other across systems, it is necessary to decide which data is correct.

- Uniformity: how well the data conforms to a standard unit of measure in all systems. In situations where datasets are from different regions, fields like distance may be recorded in either metric or imperial units, and so need to be converted.

Who is normally responsible for data cleansing?

As we’ve seen, data cleansing is a key part of the data management process within an organization. The question as to who is responsible for data cleansing depends mainly on the size of the organization and the structures relating to data management.

In large organizations, there will often be some sort of ‘data services’ team that is responsible for all aspects of data management, including making sure that data is of a high quality (i.e. clean). In these cases, data cleansing is often done by data quality analysts and engineers or other data management professionals, but it can be delegated to BI analysts or business users as appropriate. In fact, it is often better that business users are involved in data cleansing, as they have more domain knowledge and a keen interest in making sure the job is done well.

The extent to which business users are involved in data cleansing can also depend on the skills needed to do the job, as well as the culture and norms of the organization. It may be that only technical resources have permissions or access to make changes to data in databases. In these cases, business users need to log requests through to the data services team in order to get dirty data cleaned up.

In the next sections we’re going to look at 2 options for data cleansing in SQL Server which are aimed at non-technical users.

Data cleansing in SQL Server using Excel and SQL Spreads

Excel is often used in data wrangling and data cleansing processes by a variety of users using an array of techniques. The SQL Spreads Add-In for Excel makes this easier by allowing you to directly make updates to data in your SQL tables from within the familiar Excel UI.

SQL Spreads has the following features to make your data cleansing jobs simpler and easier:

- easily connect to tables in SQL Server and view and change data directly from within Excel before saving back to SQL

- use Excel files to review and clean data and then create new tables in SQL Server with the push of a button

- use lookup columns and drop-down lists to ensure users enter valid values

- use ‘post-save’ queries in SQL Spreads to validate data before it is pushed to your SQL tables

We’ll briefly look at these capabilities.

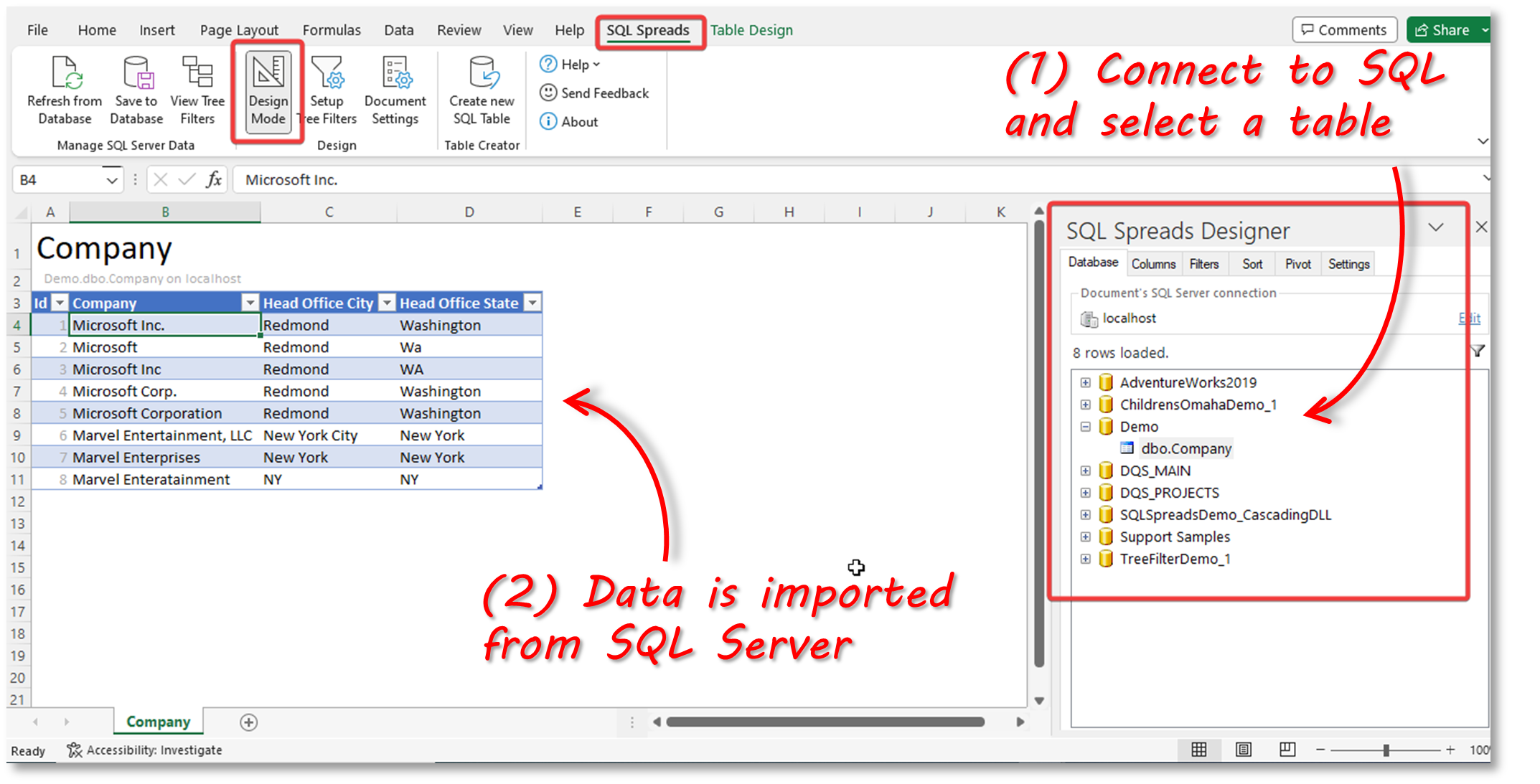

Import data from SQL Server, clean in Excel and save back to SQL Server

Using the SQL Spreads Add-In for Excel it is very easy to create a connection to SQL Server and then import data from a table. The screenshot below shows the SQL Spreads Designer pane – to import the sample Company table I just needed to expand the relevant database and then click on the correct table; once selected, the data is loaded into Excel.

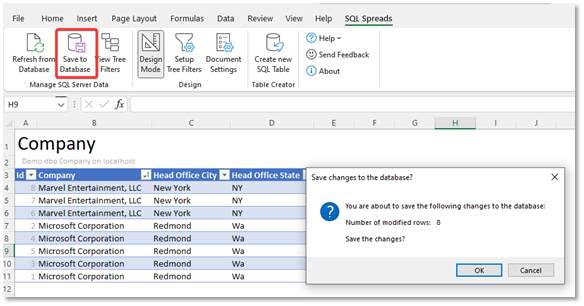

Once the data is in Excel, I can work on cleaning it up. In this simple example, I’ve copied and pasted the ‘correct’ versions of the company names and standardized the City and State names. It’s then just a case of clicking on the ‘Save to Database’ button to have the data written back to the table in SQL Server.

Clean data in Excel and create new tables in SQL Server

In data cleansing, it is common to get sent one or more tables of dirty data in Excel or CSV and be tasked with cleaning the data and inserting it into a table in SQL Server.

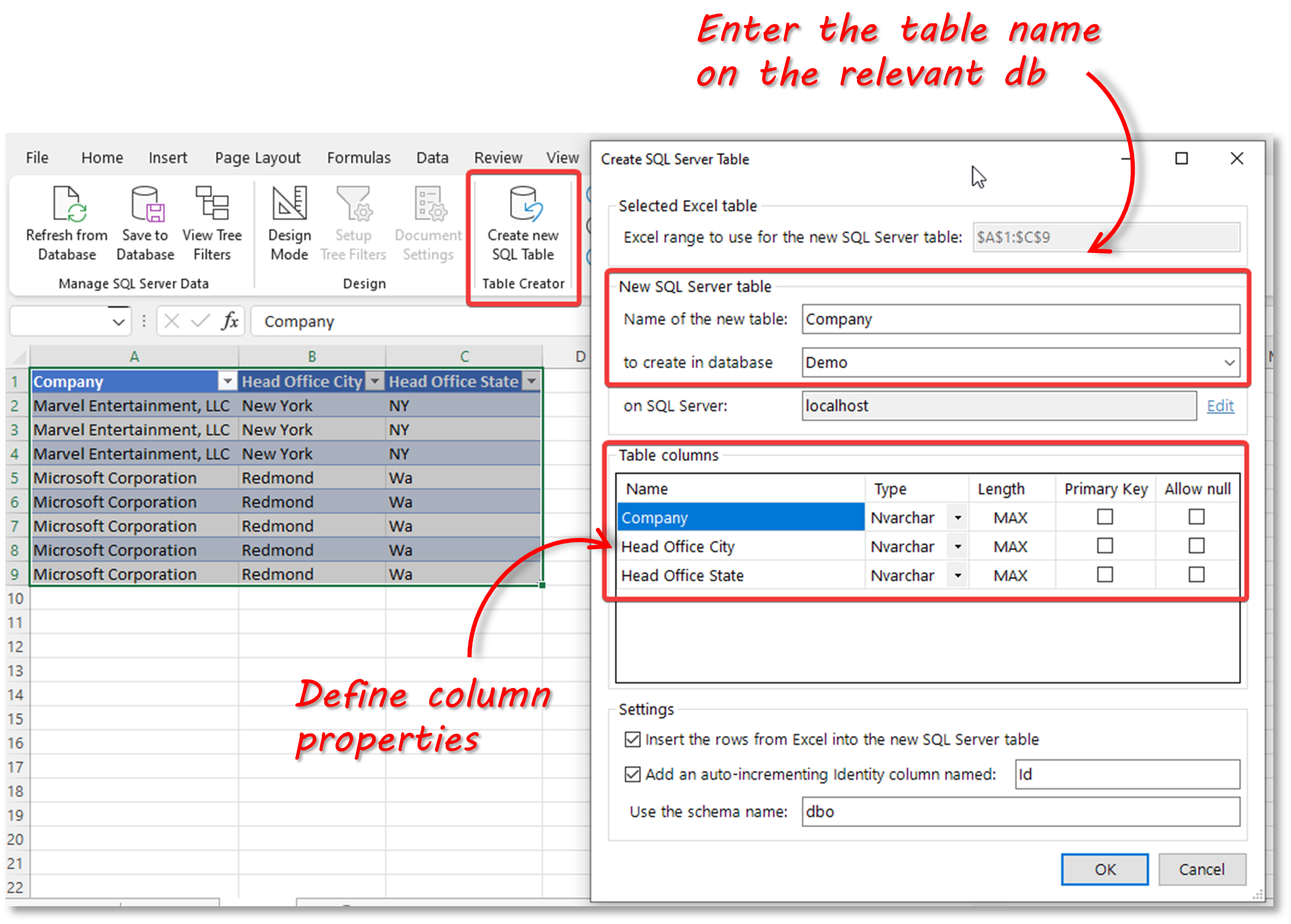

SQL Spreads has a feature called ‘Table Creator’. Once you have cleaned up your data in Excel, all you need to do to get the data into SQL Server is click on the ‘Create New SQL Table’ button. You’ll be prompted to enter a table name and select a relevant database. You can also define the column properties.

When you click ‘Ok’, the table, along with the data, will be created in SQL Server.

When you click ‘Ok’, the table, along with the data, will be created in SQL Server.

Use lookup columns and drop-down lists

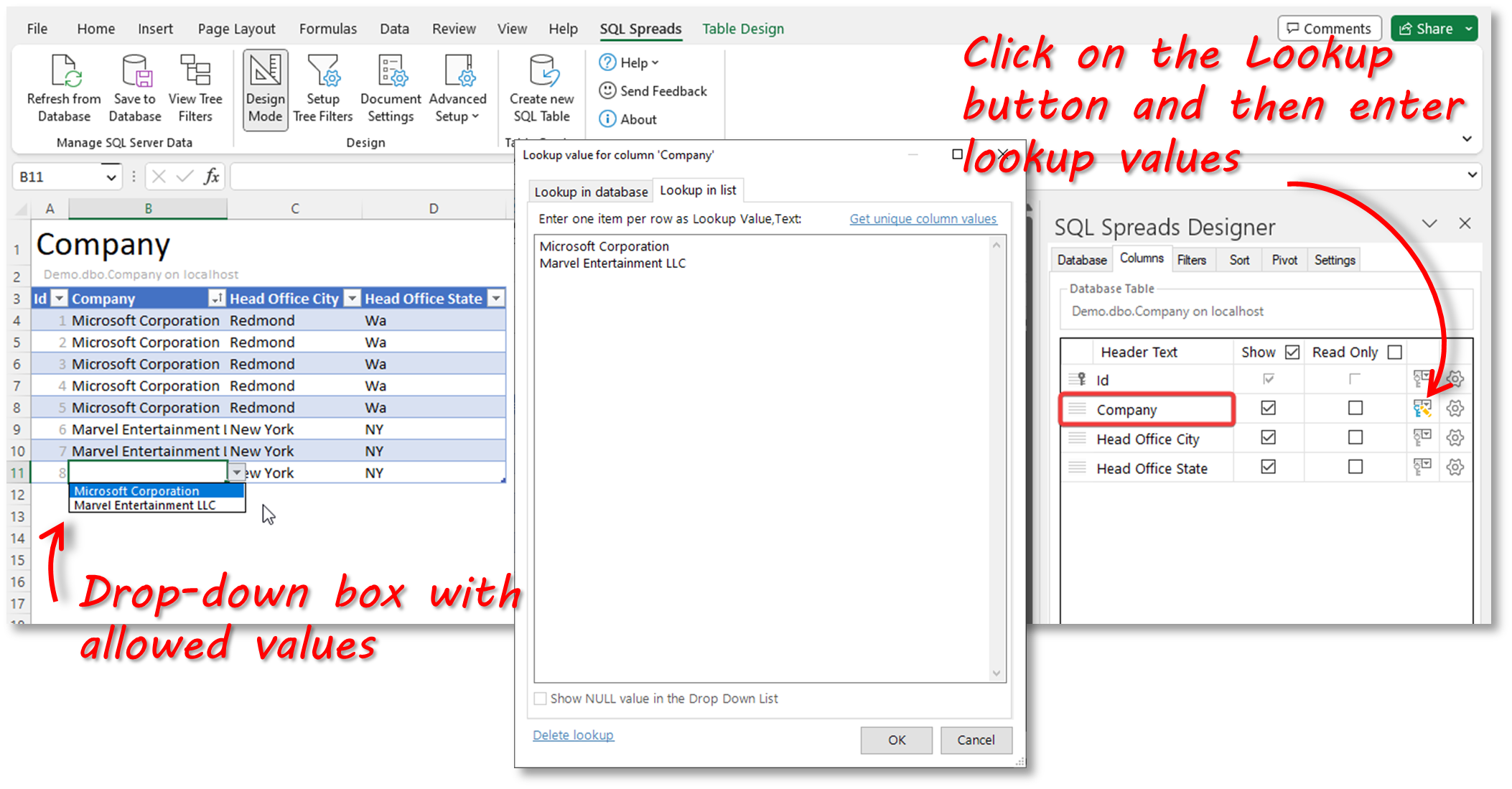

You can prevent users from entering invalid data during the cleansing process by using drop-down lists in columns. The drop-down list can use values from a fixed list that you specify, or from a column in a related table.

Lookup Key values from a fixed list

This approach is useful if you just have a few values in the lookup list. You can enter a key/text pair , in which case the text value is displayed in the drop-down, whilst the corresponding key value will be written to the database. Alternatively, you can enter text values only, like in the example below.

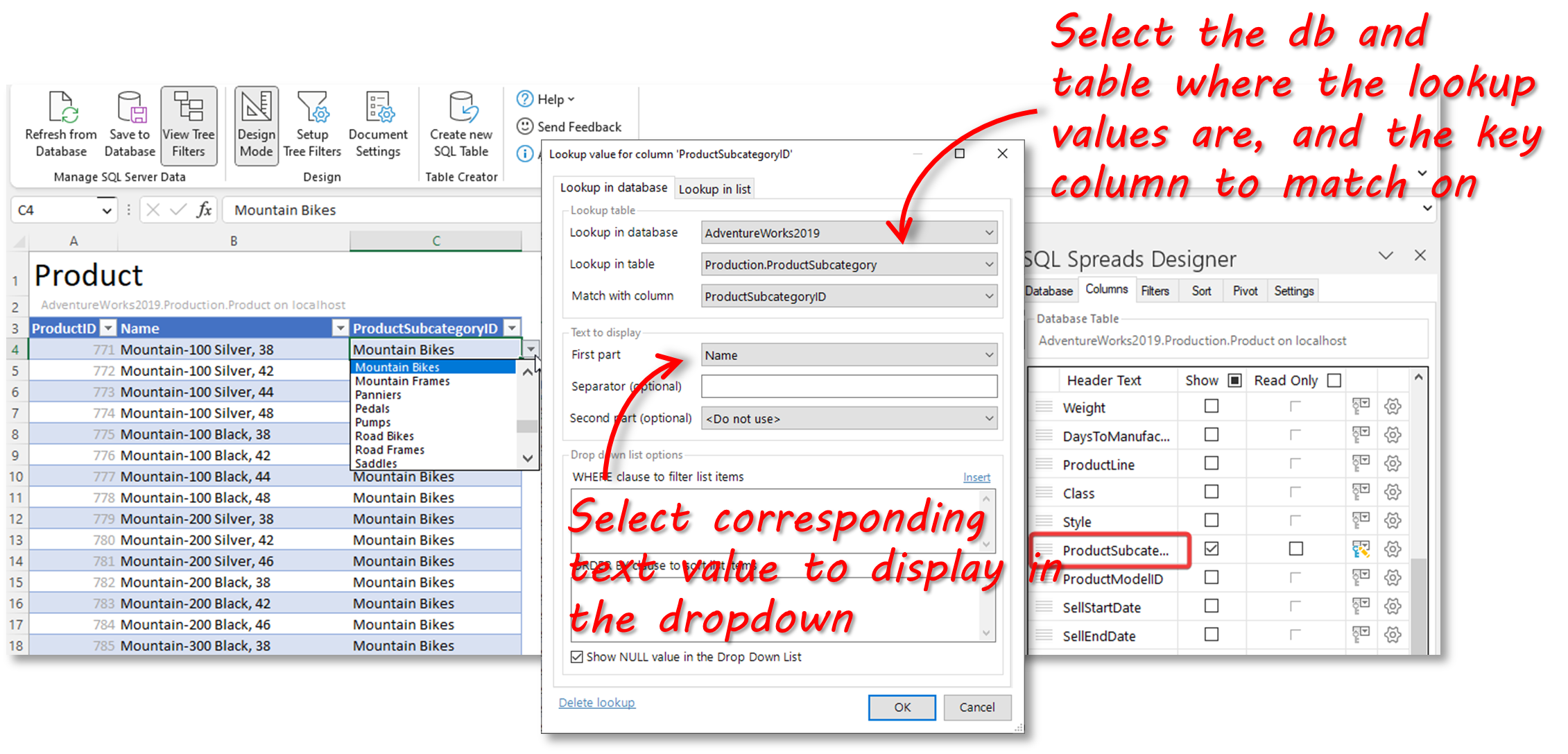

Lookup Key values from a database table

You can also create a lookup that retrieves key values from a related table. For example, you may have an Orders table that contains customer orders and which has an ID value in the Customer column. It would not be practical for users that are trying to cleanse the data to have to work with Customer ID numbers – instead it is much better to display the actual Customer name from the related Customer table.

The screenshot below shows the steps to do this in SQL Spreads:

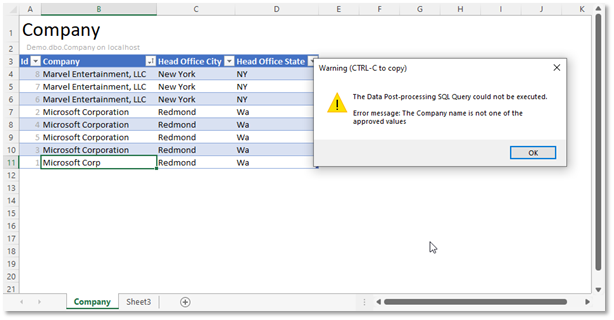

Use Post-Save queries to validate data

With SQL Spreads, you can easily add SQL data validation rules from within Excel using a data post-processing script.

The Data Post-processing script is a SQL query that will be executed as the last step in the transaction that updates the database with the changes made in Excel. By default, SQL Spreads will always validate all entered values against the Data Types in SQL Server. The process described below is a more advanced option that is similar to the CHECK constraint in SQL Server.

The example below shows a Post-Save SQL query that checks the Company field to see if the contents match the ‘approved’ values:

IF (

SELECT COUNT([Company])

FROM [Demo].[dbo].[Company]

WHERE Company NOT IN ('Microsoft Corporation', 'Marvel Entertainment, LLC'))

> 0

RAISERROR('The Company name is not one of the approved values',16,1);The screenshot below shows the validation in action:

Data cleansing in SQL Server using Data Quality Services

Data Quality Services (DQS) is a component of the SQL Server suite. You can install it as an additional feature as part of the installation process.

DQS is made up of two components:

- Data Quality Server: this is installed on top of the SQL Server Database Engine, and includes three databases:

- DQS_MAIN contains DQS stored procedures, the DQS engine, and published knowledge bases.

- DQS_PROJECTS contains the data quality project information.

- DQS_STAGING_DATA is the staging area where you can copy your source data to perform DQS operations, and then export your processed data.

- Data Quality Client: this is a standalone application that enables you to connect to Data Quality Server, and provides you with an interface to perform data-quality operations

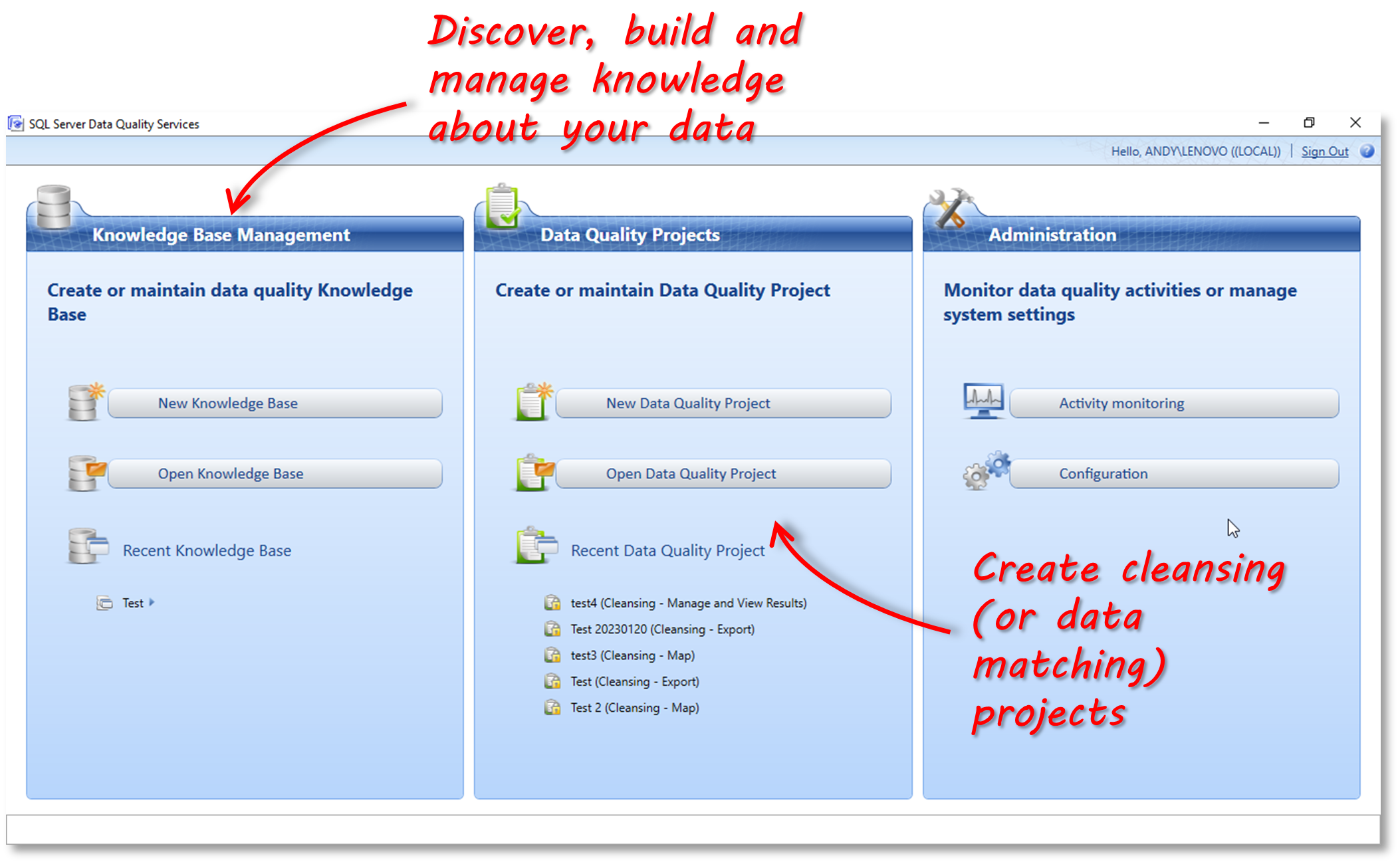

In simple terms, you use DQS to discover, build and manage knowledge about your data. Using this knowledge, DQS can then be used to cleanse your data using computer-assisted and interactive methods. The main console of the DQS client shows these two main activities (along with an Admin section).

Knowledge Base Management

DQS uses a knowledge base (KB) to identify incorrect or invalid data. The knowledge base is essentially the ‘gold standard’ against which you want to check the quality of your data. DQS allows you to create your own knowledge base (i.e. your own company specific knowledge) or you can use reference data from third parties via the Azure Marketplace. When you install DQS, a sample knowledge base containing global and US country and city data is included.

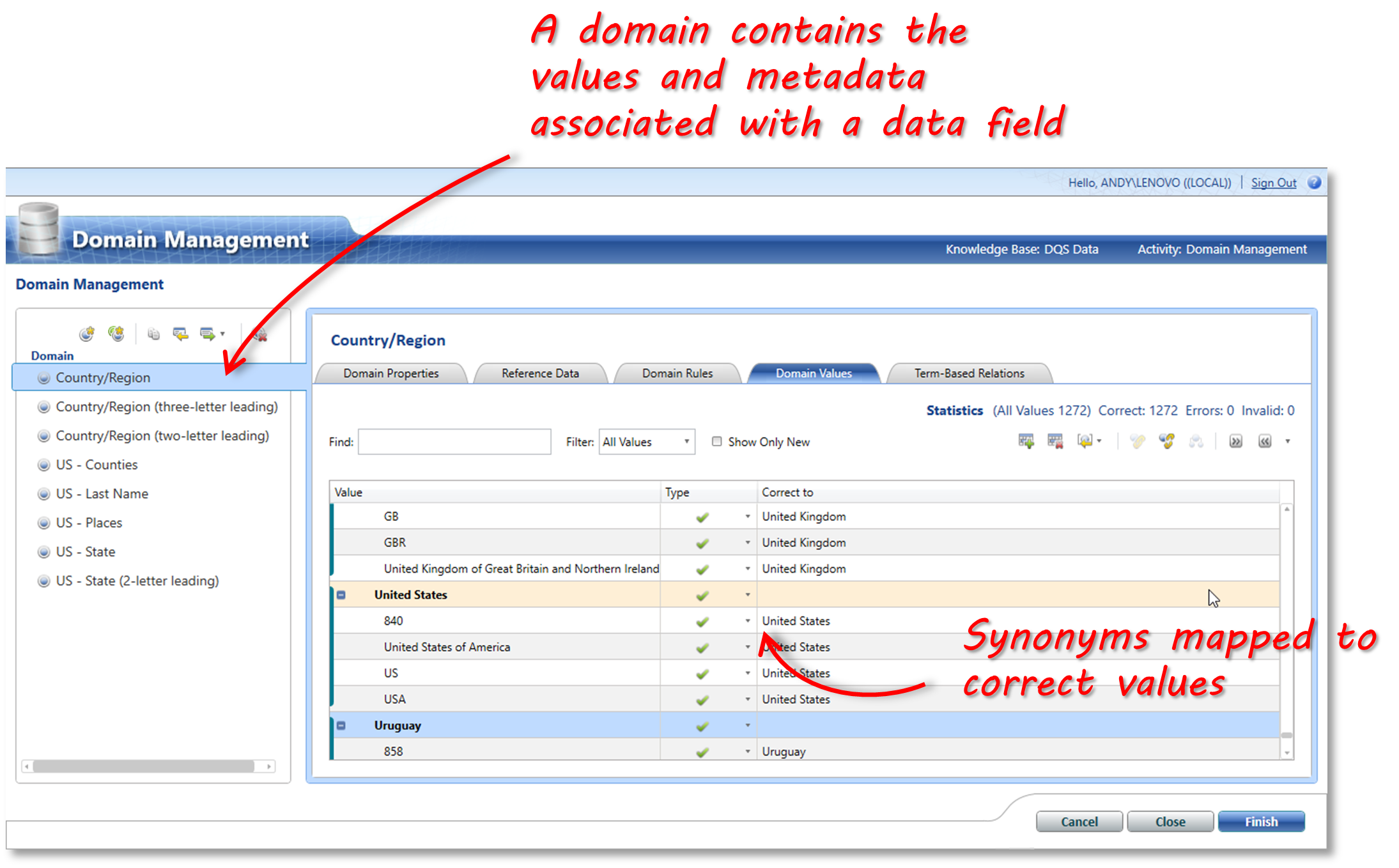

A knowledge base contains domains. A domain contains the values and metadata associated with a data field.

In the sample knowledge base below, you can see that there are several domains, each concerned with the properties, rules and values of fields related to countries and cities. You can see that the highlighted domain will check values for country and can automatically correct any synonyms to the correct value – e.g. change ‘USA’ or ‘US’ to ‘United States’.

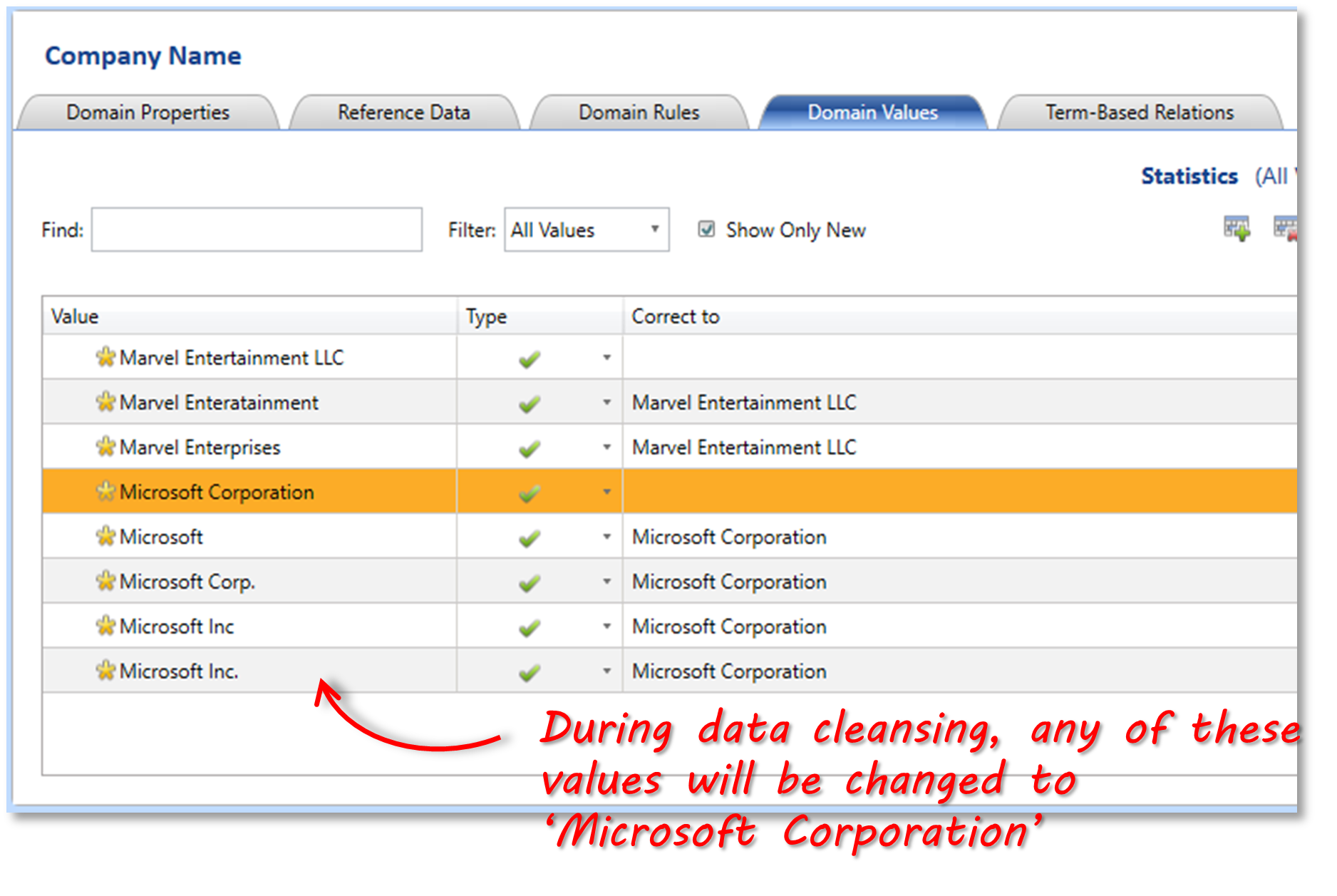

You can also create your own knowledge base domains. In the example below I’ve created a knowledge base domain to check and correct company names.

You can also create your own knowledge base domains. In the example below I’ve created a knowledge base domain to check and correct company names.

Once the knowledge for your data has been defined in the domains within a knowledge base, you can now perform some data cleansing by creating a Data Quality Project.

Once the knowledge for your data has been defined in the domains within a knowledge base, you can now perform some data cleansing by creating a Data Quality Project.

Data Quality Projects

A data quality project is the means of using a knowledge base to improve the quality of our source data by performing data cleansing or data matching activities, and then exporting the resultant data to a SQL Server database or a .csv file.

To cleanse data, we need to create a data quality project. The data quality project contains the following steps:

- user defines the source data (SQL table or Excel file) and the mapping of the columns in the source data to the domains

- DQS performs an automated cleansing step

- user reviews the results and corrects (if necessary)

- user defines the export destination of the results

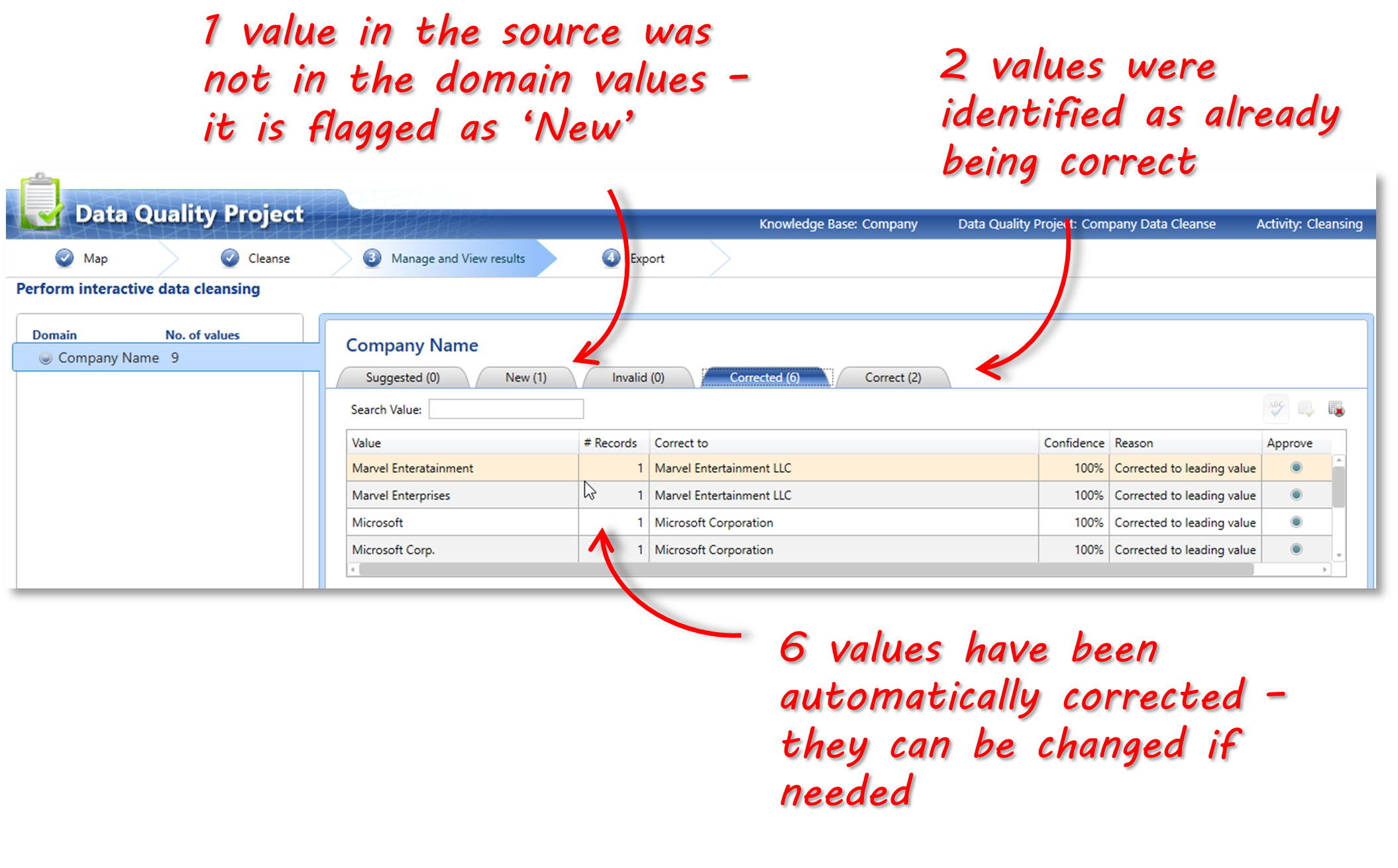

The screenshot below shows the ‘Manage and View Results’ screen – in this you can see how DQS interpreted the data sample (containing 9 records) that I wanted to have cleansed.

- The company name for one of the records (“Msft”) was not found in the Company domain, so it was marked as ‘New’ – I would need to enter what the correct value is for this record.

- Six of the records were automatically changed to the correct (‘leading’) value – i.e. they were synonyms for the company name that are matched to the correct value in the domain.

- Two of the records were correct and needed no actions.

It is likely that during a data cleansing exercise, there will always be a mix of records that are marked as New, Corrected and Correct. In other words, DQS is a combination of automated and interactive data cleansing.

I can now choose to export the cleansed data to a SQL table, or an Excel file

I can now choose to export the cleansed data to a SQL table, or an Excel file

Summary

In this article we’ve looked at data cleansing, and some ways of data cleansing in SQL Server specifically.

Data cleansing is a crucial part of data management. It helps ensure that your data is of high quality. Poor quality data can cause many issues in the workplace and can result in bad decisions being made.

With the rise of data democratization in the workplace, more people across the organization are exposed to the problem of dirty data and are also more likely to be involved in the process of cleaning it. Having tools that can be used by a wider audience, including non-technical users can therefore be very beneficial. In this article, we looked at 2 approaches for data cleansing in SQL Server, as it is one of the more prevalent databases in use.

The first approach used the SQL Spreads Add-In for Excel. SQL Spreads is a lightweight Data Management solution to easily use Excel to update and manage data in Microsoft SQL Server. We looked at four features of SQL Spreads that makes it easy for general business users to perform data cleansing in Excel and save the data to SQL Server.

We also looked at Data Quality Services (DQS) which is a component of the SQL Server suite. It includes two main activities – defining and maintaining knowledge bases that contain the ‘gold standard’ against which you want to check the quality of your data, and the cleansing process itself. DQS is a solid product built on top of SQL Server and would probably mainly be used by data quality analysts and engineers.

To learn more about SQL Spreads, download the trial version here.